Journal Publications

FOVEA: a new program to standardize the measurement of foveal pit morphology

Authors: Bret A. Moore, Innfarn Yoo, Luke P. Tyrrell, Bedrich Benes, and Esteban Fernandez-Juricic

Abstract

The fovea, one of the most studied retinal specializations in vertebrates, consists of an invagination of the retinal tissue with high packing of cone photoreceptors, leading to high visual resolution. Between species, foveae differ morphologically in the depth and width of the foveal pit and the steepness of the foveal walls, which could influence visual perception. However, there is no standardized methodology to measure the contour of the foveal pit across species. We present here FOVEA, a program for the quantification of foveal parameters (width, depth, slope of foveal pit) using images from histological cross-sections or optical coherence tomography (OCT). FOVEA is based on a new algorithm that detects the inner retina contour from the color variation of the image. We evaluated FOVEA by comparing the fovea morphology of two passerine birds based on histological cross-sections, and by testing its performance on data from previously published OCT images. FOVEA detected differences between species, and its output was not significantly different from previous estimates obtained with OCT software. FOVEA can be used for comparative studies to better understand the evolution of fovea morphology in vertebrates, as well as for diagnostic purposes in veterinary pathology. FOVEA is freely available for academic use and can be downloaded at: http://estebanfj.bio.purdue.edu/fovea

Motion Style Retargeting to Characters with Different Morphologies

Authors: Michel Abdul Massih, Innfarn Yoo, and Bedrich Benes

Abstract

We present a novel approach for style retargeting to non-humanoid characters by allowing extracted stylistic features from one character to be added to the motion of another character with a different body morphology. We introduce the concept of groups of body parts (GBP), for example the torso, legs and tail, and we argue that they can be used to capture the individual style of a character motion. By separating GBPs from a character, the user can define mappings between characters with different morphologies. We automatically extract the motion of each GBP from the source, map it to the target, and then use a constrained optimization to adjust all joints in each GBP in the target to preserve the original motion while expressing the style of the source. We show results on characters that present different morphologies to the source motion from which the style is extracted. The style transfer is intuitive and provides a high level of control. For most of the examples in this paper the definition of groups of body parts takes around five minutes and the optimization about seven minutes on average. For the most complicated examples, the definition of three GBP and their mapping takes about ten minutes and the optimization another thirty minutes.

Authors: Innfarn Yoo, Michel Abdul Massih, Illia Ziamtsov, Raymond Hassan, and Bedrich Benes

Abstract

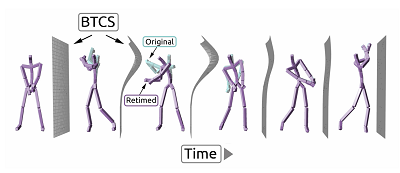

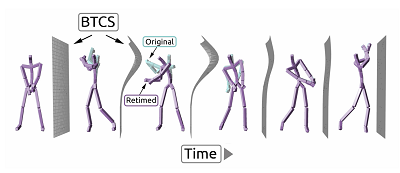

Motion retiming is an important tool used to edit character animations. It consists of changing the time at which an action occurs during an animation. One example might be editing an animation of a person playing football to change the precise time at which the ball is kicked. It is, nevertheless, a non-trivial task to retime the motion of a set of joints, since spatio-temporal correlation exists among them. It is especially difficult in the case of motion capture, where forward kinematic keys on every frame define the motion. In this paper, we present a novel approach to motion retiming that exploits the proximity of joints to preserve the motion coherence when a retiming operation is performed. We introduce the Bilateral Time Control Surface (BTCS), a framework that allows users to intuitively and interactively retime motion. The BTCS is a free-form surface, located on the timeline, that can be interactively deformed to move the action of a particular joint to a certain time, while preserving the coherency and smoothness of surrounding joints. The animation is retimed by manipulating successive BTCSs, and the final animation is generated by resampling the original motion by time spans defined by the BTCSs.
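The idea of retiming by resampling can be illustrated with a small sketch. This is not the BTCS itself: it assumes a much simpler stand-in in which one event is shifted in time for an edited joint, the shift falls off with a Gaussian of each joint's hop distance from that joint, and every channel is resampled through a piecewise-linear time warp. All names (`retime`, `time_warp`, `joint_dist`, `sigma`) are hypothetical and chosen only for illustration.

```python
import math

def lerp_sample(samples, t):
    """Linearly interpolate a uniformly sampled channel at fractional frame t."""
    t = min(max(t, 0.0), len(samples) - 1)
    i = min(int(t), len(samples) - 2)
    frac = t - i
    return samples[i] * (1.0 - frac) + samples[i + 1] * frac

def time_warp(frame, src, dst, last):
    """Monotonic piecewise-linear map from an output frame to a source frame.

    Output frame dst reads source frame src; frames 0 and last stay fixed.
    Requires 0 < src < last and 0 < dst < last.
    """
    if frame <= dst:
        return frame * src / dst
    return src + (frame - dst) * (last - src) / (last - dst)

def retime(motion, joint_dist, src, shift, sigma=1.0):
    """Move the event at frame src by `shift` frames for the edited joint.

    motion:     {joint name: list of per-frame values for one channel}
    joint_dist: {joint name: hop distance from the edited joint} (assumed input)
    Joints far from the edited joint receive a smaller shift (Gaussian falloff),
    a simplified stand-in for the smooth surface described in the abstract.
    """
    out = {}
    for joint, samples in motion.items():
        last = len(samples) - 1
        weight = math.exp(-(joint_dist[joint] ** 2) / (2.0 * sigma ** 2))
        dst = src + weight * shift
        out[joint] = [lerp_sample(samples, time_warp(f, src, dst, last))
                      for f in range(len(samples))]
    return out

# Example: a ramp channel makes the warp easy to read off the output values.
ramp = [float(i) for i in range(11)]
motion = {"foot": ramp, "head": ramp}
joint_dist = {"foot": 0, "head": 3}
result = retime(motion, joint_dist, src=5, shift=2)
```

With these inputs, the pose the foot originally had at frame 5 is reached at frame 7, while the head, three hops away, is almost unchanged.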

Authors: Shengchuan Zhou, Innfarn Yoo, Bedrich Benes, and Ge Chen

Abstract

A novel hybrid level-of-detail (LOD) algorithm is introduced. We combine point-based, line-based, and splat-based rendering to synthesize large-scale urban city images. We first extract lines and points from the input and provide their simplification encoded in a data structure that allows for a quick and automatic LOD selection. A screen-space projected area is used as the LOD selector. The algorithm selects lines for long-distance views providing high contrast and fidelity of the building silhouettes. For medium-distance views, points are added, and splats are used for close-up views. Our implementation shows a 10× speedup as compared with the ground truth models and is about four times faster than geometric LOD. The quality of the results is indistinguishable from the original as confirmed by a user study and two algorithmic metrics.
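A screen-space area selector of this kind can be sketched as follows. The sketch assumes a perspective camera and approximates each building by a bounding sphere; the pixel thresholds (`line_max`, `point_max`) and function names are hypothetical and are not taken from the paper.

```python
import math

def projected_area_px(radius, distance, fov_y_deg, viewport_height_px):
    """Approximate on-screen area (in pixels) of a bounding sphere."""
    # Focal length in pixels for a perspective camera with vertical FOV.
    focal_px = 0.5 * viewport_height_px / math.tan(math.radians(fov_y_deg) / 2.0)
    radius_px = radius * focal_px / max(distance, 1e-6)
    return math.pi * radius_px * radius_px

def select_lod(area_px, line_max=400.0, point_max=10000.0):
    """Pick a representation from the projected area (thresholds are assumed)."""
    if area_px < line_max:
        return "lines"          # long-distance view: silhouettes only
    if area_px < point_max:
        return "lines+points"   # medium-distance view: points are added
    return "splats"             # close-up view

# Example: the same building (radius 10) seen from three distances.
for distance in (1000.0, 200.0, 20.0):
    area = projected_area_px(10.0, distance, fov_y_deg=90.0, viewport_height_px=1080)
    print(distance, select_lod(area))
```

Using the projected area rather than raw distance means a large building far away and a small one nearby can receive the same representation, which is the behavior a screen-space selector is meant to give.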

Authors: Innfarn Yoo, Juraj Vanek, Maria Nizovtseva, Nicoletta Adamo-Villani, and Bedrich Benes

Abstract

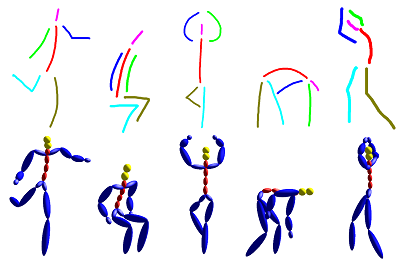

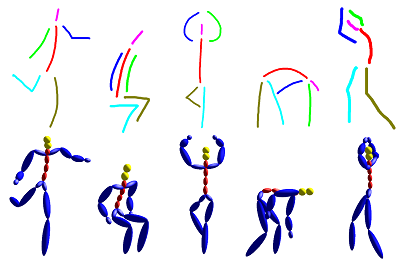

Quick creation of 3D character animations is an important task in game design, simulations, forensic animation, education, training, and more. We present a framework for creating 3D animated characters using a simple sketching interface coupled with a large, unannotated motion database that is used to find the appropriate motion sequences corresponding to the input sketches. In contrast to previous work that deals with static sketches, our input sketches can be enhanced by motion and rotation curves that improve matching in the context of the existing animation sequences. Our framework uses animated sequences as the basic building blocks of the final animated scenes, and allows for various operations on them such as trimming, resampling, or connecting by use of blending and interpolation. A database of significant and unique poses, together with a two-pass search running on the GPU, allows for interactive matching even for large numbers of poses in the template database. The system provides intuitive interfaces and immediate feedback, and places very few demands on the user. A user study showed that the system can be used by novice users with no animation experience or artistic talent, as well as by users with an animation background. Both groups were able to create animated scenes consisting of complex and varied actions in less than 20 minutes.

Conference Talks

Massive Time-Lapse Point Cloud Rendering with VR

Abstract

We present novel methods for visualizing massive time-lapse point cloud data and for navigating and handling point clouds in VR. Our method provides new approaches for real-time rendering of 120 GB of time-lapse point cloud data, and we aim to apply it to 2 TB of data. Our method includes solutions for color mismatching, registration, out-of-core design, and memory management.

The presentation slides can be downloaded from the GTC 2016 webpage:

Presentation Slides

Conference Posters

Motion Retiming using CUDA and Smooth Surfaces

Sketching 3D Animations using CUDA

Motion retiming is an important tool used to edit character animations. It consists of changing the time at which an action occurs during an animation. One example might be editing an animation of a person playing football to change the precise time at which the ball is kicked. It is, nevertheless, a non-trivial task to retime the motion of a set of joints, since spatio-temporal correlation exists among them. It is especially difficult in the case of motion capture, where forward kinematic keys on every frame define the motion. In this paper, we present a novel approach to motion retiming that exploits the proximity of joints to preserve the motion coherence when a retiming operation is performed. We introduce the Bilateral Time Control Surface (BTCS), a framework that allows users to intuitively and interactively retime motion. The BTCS is a free-form surface, located on the timeline, that can be interactively deformed to move the action of a particular joint to a certain time, while preserving the coherency and smoothness of surrounding joints. The animation is retimed by manipulating successive BTCSs, and the final animation is generated by resampling the original motion by time spans defined by the BTCSs.

Quick creation of 3D character animations is an important task in game design, simulations, forensic animation, education, training, and more. We present a framework for creating 3D animated characters using a simple sketching interface coupled with a large, unannotated motion database that is used to find the appropriate motion sequences corresponding to the input sketches. In contrast to previous work that deals with static sketches, our input sketches can be enhanced by motion and rotation curves that improve matching in the context of the existing animation sequences. Our framework uses animated sequences as the basic building blocks of the final animated scenes, and allows for various operations on them such as trimming, resampling, or connecting by use of blending and interpolation. A database of significant and unique poses, together with a two-pass search running on the GPU, allows for interactive matching even for large numbers of poses in the template database. The system provides intuitive interfaces and immediate feedback, and places very few demands on the user. A user study showed that the system can be used by novice users with no animation experience or artistic talent, as well as by users with an animation background. Both groups were able to create animated scenes consisting of complex and varied actions in less than 20 minutes.
